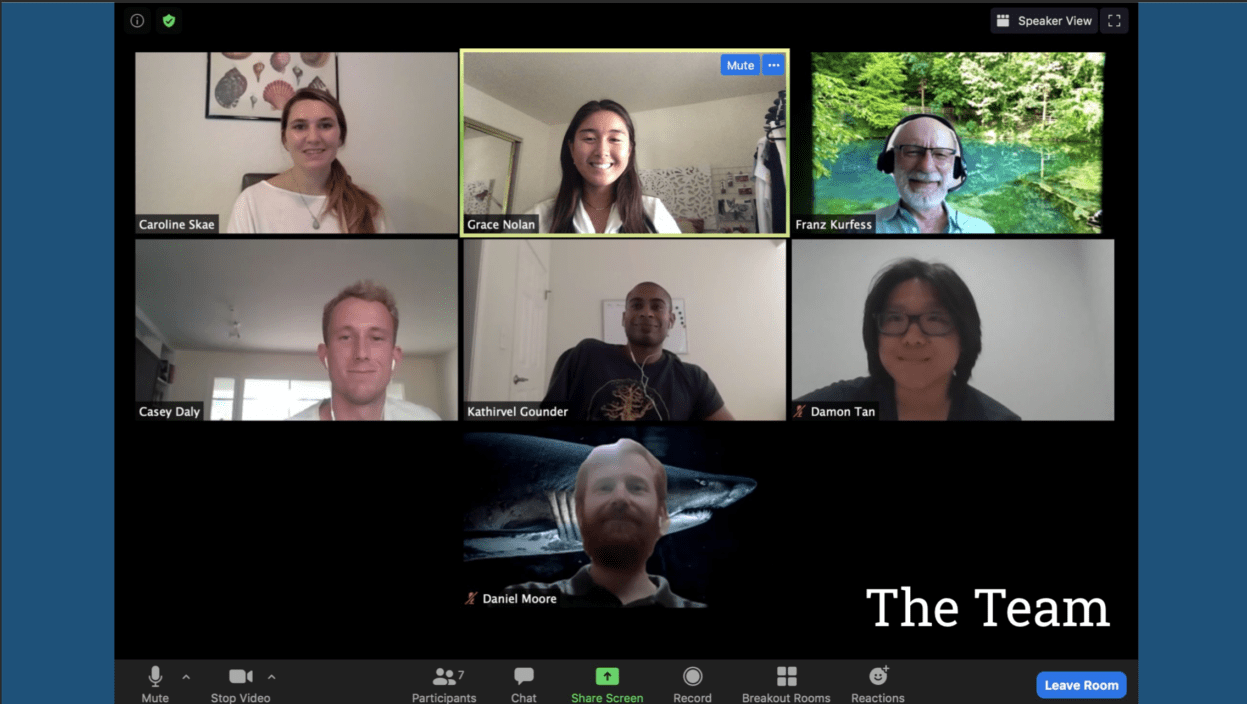

Our Team

Our Shark Spotting team is made up of Cal Poly students, faculty, and alumni with a variety of backgrounds.

Caroline Skae

Volunteer

Caroline Skae recently graduated with a B.S. in general engineering, during which she completed an individual course of study in Marine Conservation. She is an avid diver, sailor, and ocean advocate. She aims to bridge the gap between technology and ocean science in order to create cleaner and healthier oceans!

Kathir Gounder

SURP Student

Kathir is a Computer Science senior and undergraduate researcher at Cal Poly. Last summer, he was a visiting student and machine learning researcher at UC Berkeley, and he is also involved in many artificial intelligence activities on campus.

Grace Nolan

SURP Student

Grace is a third-year Computer Science student and undergraduate researcher at Cal Poly. Her experience and interests are in artificial intelligence and UI/UX design.

Casey Daily

Volunteer

Casey is a 2020 graduate of Cal Poly who currently works in the Bay Area. His technical interests include back-end development and machine learning, and he is planning to return to school for a master’s degree. Outside of work and research, he enjoys surfing, biking, and playing basketball.

Franz Kurfess

Project Advisor

Franz J. Kurfess joined the Computer Science Department of California Polytechnic State University in 2000. He holds an M.S. and a Ph.D. in Computer Science from the Technical University of Munich. His main research areas are Artificial Intelligence and Human-Computer Interaction, and he teaches mainly in those two areas.

Damon Tan

Volunteer

Damon Tan is a second-year undergraduate studying biomedical engineering at Cal Poly San Luis Obispo. He is from Rosemead, California, near Los Angeles, and enjoys fishing and surfing at the beach as well as flying drones.

Daniel Moore

Volunteer

Before attending Cal Poly, Daniel worked as a Java eCommerce developer for clients ranging from startups to Fortune 1000 companies. With two bachelor’s degrees, in International Studies and Computer Science, from Baylor University, Daniel is pursuing a Master of Computer Science at Cal Poly. On the Cal Poly Shark Spotting Project, Daniel has been researching object detection methods using TensorFlow.

Our team comes from diverse backgrounds in engineering, computer science, and marine science. San Luis Obispo’s beautiful coastline has allowed many of us to explore the ocean ourselves through surfing, diving, and swimming. We are committed to using technology to learn more about our coastline and to enhance the safety of all ocean-goers.

Acknowledgements

Thank you to Nic and Sara Johnson for their sponsorship of this project; to Chris Lowe and Patrick Rex from the CSU Long Beach Shark Lab for their footage, knowledge, and collaboration; and to Jeanine Scaramozzino and Russ White from Cal Poly’s Kennedy Library for their time, resources, and contributions.

Our Project Video

Our Presentation Slides

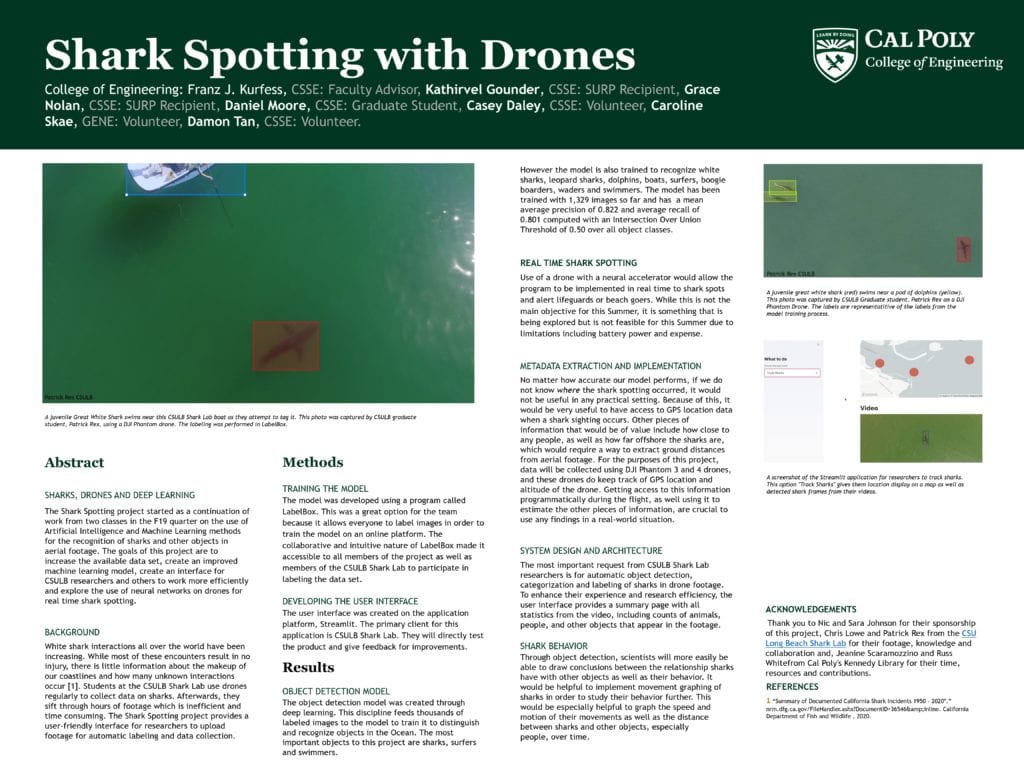

Our Project's Digital Poster

Abstract

Sharks, Drones, and Deep Learning

The Shark Spotting project began as a continuation of work from two classes in the Fall 2019 (F19) quarter on using Artificial Intelligence and Machine Learning methods to recognize sharks and other objects in aerial footage. The goals of this project are to expand the available data set, create an improved machine learning model, build an interface that helps CSULB researchers and others work more efficiently, and explore the use of neural networks on drones for real-time shark spotting.

Background

White shark interactions around the world have been increasing. While most of these encounters result in no injury, there is little information about the makeup of our coastlines or about how many interactions go undocumented [1]. Students at the CSULB Shark Lab regularly use drones to collect data on sharks. Afterwards, they must sift through hours of footage, a process that is inefficient and time-consuming. The Shark Spotting project provides a user-friendly interface where researchers can upload footage for automatic labeling and data collection.

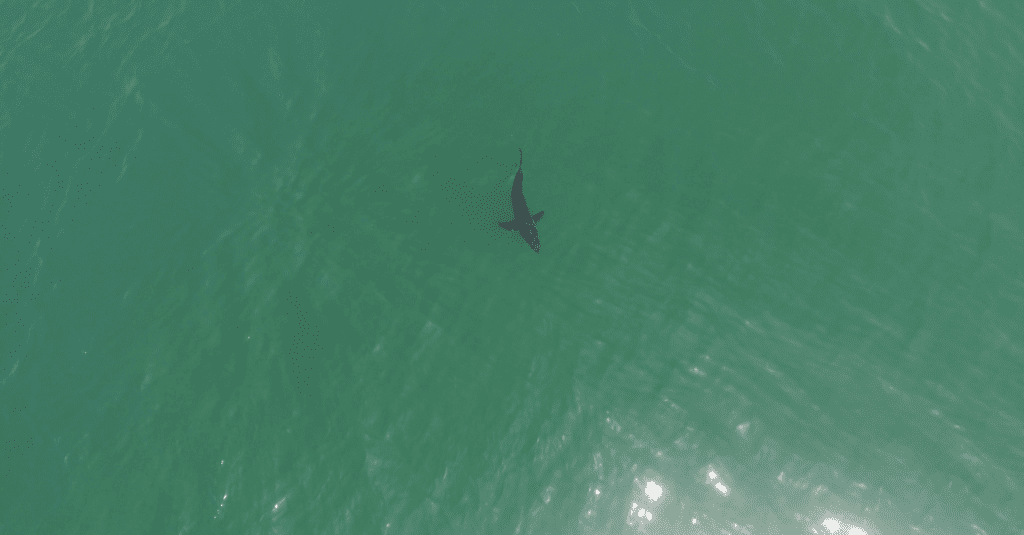

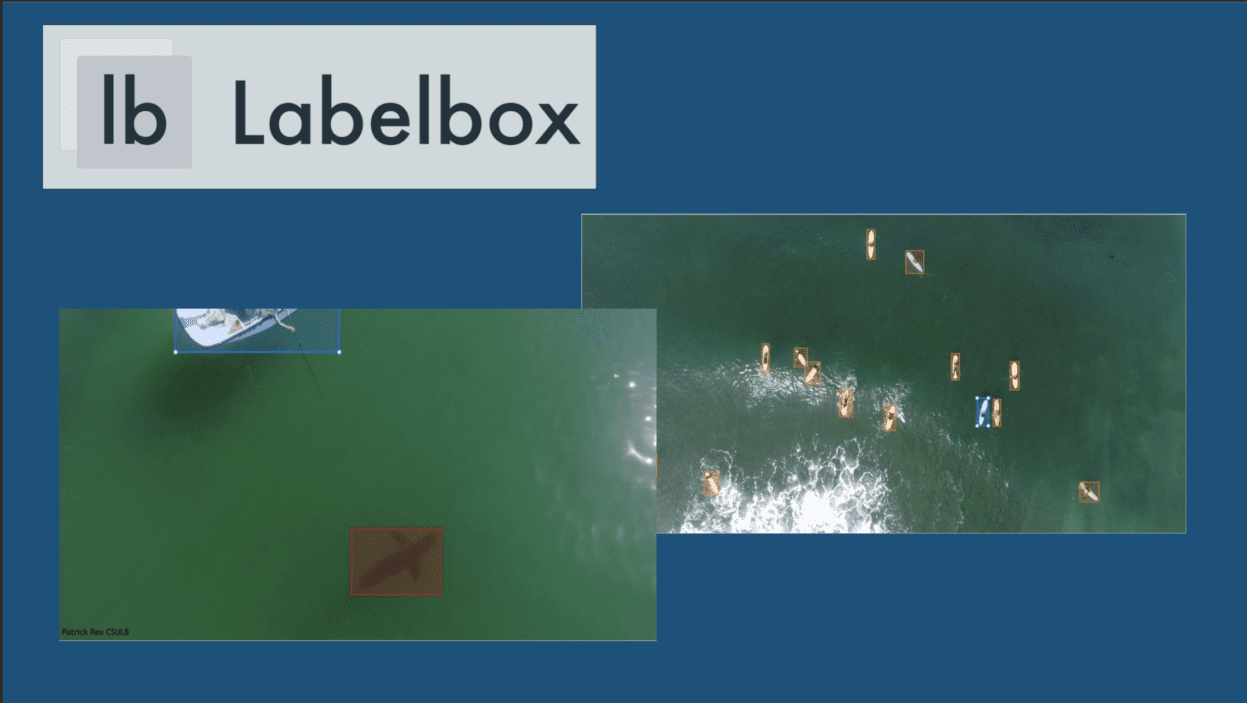

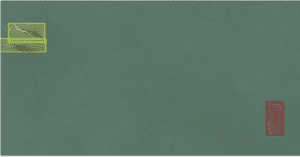

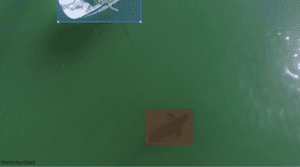

A juvenile great white shark (red) swims near a pod of dolphins (yellow). This photo was captured by CSULB graduate student Patrick Rex with a DJI Phantom drone. The labels are representative of those used in the model training process.

Shark Behavior

Through object detection, scientists will be able to draw conclusions more easily about shark behavior and about the relationships between sharks and other objects. Adding movement graphing would support further study of shark behavior, in particular by plotting the speed and motion of sharks and their distance from other objects, especially people, over time.
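A minimal sketch of the quantities such movement graphs would plot, assuming a tracker (not described here) already supplies one ground position in meters per sampled frame for each object. The names `speeds` and `separations` and the input format are hypothetical, not part of the project's code.

```python
import math
from typing import List, Tuple

# Hypothetical input: (x, y) ground position in meters, one entry per sampled frame.
Point = Tuple[float, float]


def speeds(track: List[Point], fps: float) -> List[float]:
    """Speed in m/s between consecutive sampled frames of one tracked object."""
    dt = 1.0 / fps  # seconds between sampled frames
    return [math.dist(a, b) / dt for a, b in zip(track, track[1:])]


def separations(track_a: List[Point], track_b: List[Point]) -> List[float]:
    """Per-frame distance in meters between two tracked objects, e.g. a shark and a surfer."""
    return [math.dist(a, b) for a, b in zip(track_a, track_b)]


shark = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
surfer = [(10.0, 0.0), (8.0, 0.0), (6.0, 0.0)]
print(speeds(shark, fps=2.0))        # [2.0, 2.0]
print(separations(shark, surfer))    # [10.0, 7.0, 4.0]
```

Each list lines up with frame timestamps, so it could be plotted against time directly to show a shark's speed or how quickly the gap to a person is closing.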

References

[1] “Summary of Documented California Shark Incidents 1950 – 2020*.” California Department of Fish and Wildlife, 2020. nrm.dfg.ca.gov/FileHandler.ashx?DocumentID=36546&inline.

A juvenile great white shark swims near this CSULB Shark Lab boat as researchers attempt to tag it. This photo was captured by CSULB graduate student Patrick Rex using a DJI Phantom drone. The labeling was performed in Labelbox.

Methods

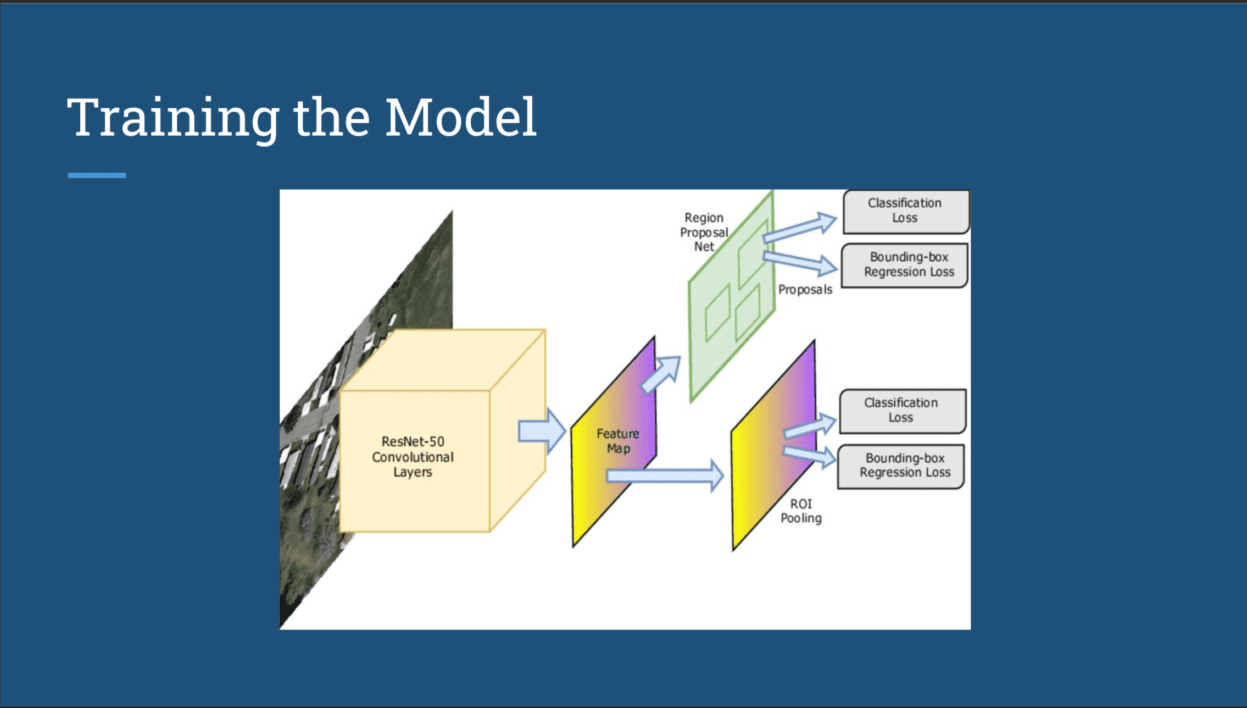

Training the Model

The images used to train the model were labeled in Labelbox, an online labeling platform. This was a great option for the team because it allows everyone to label training images collaboratively online. The collaborative and intuitive nature of Labelbox made it accessible to all members of the project as well as to members of the CSULB Shark Lab.

Developing the User Interface

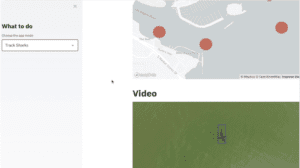

The user interface was built with Streamlit, an open-source Python framework for building data applications. The primary client for this application is the CSULB Shark Lab, whose researchers will test the product directly and give feedback for improvements.

A screenshot of the Streamlit application that researchers use to track sharks. The “Track Sharks” option displays shark locations on a map along with the frames of their videos in which sharks were detected.

A drone shot, labeled in Labelbox, showing surfers off the coast of Long Beach.

This project is sponsored by Nic and Sara Johnson.

Results

Object Detection Model

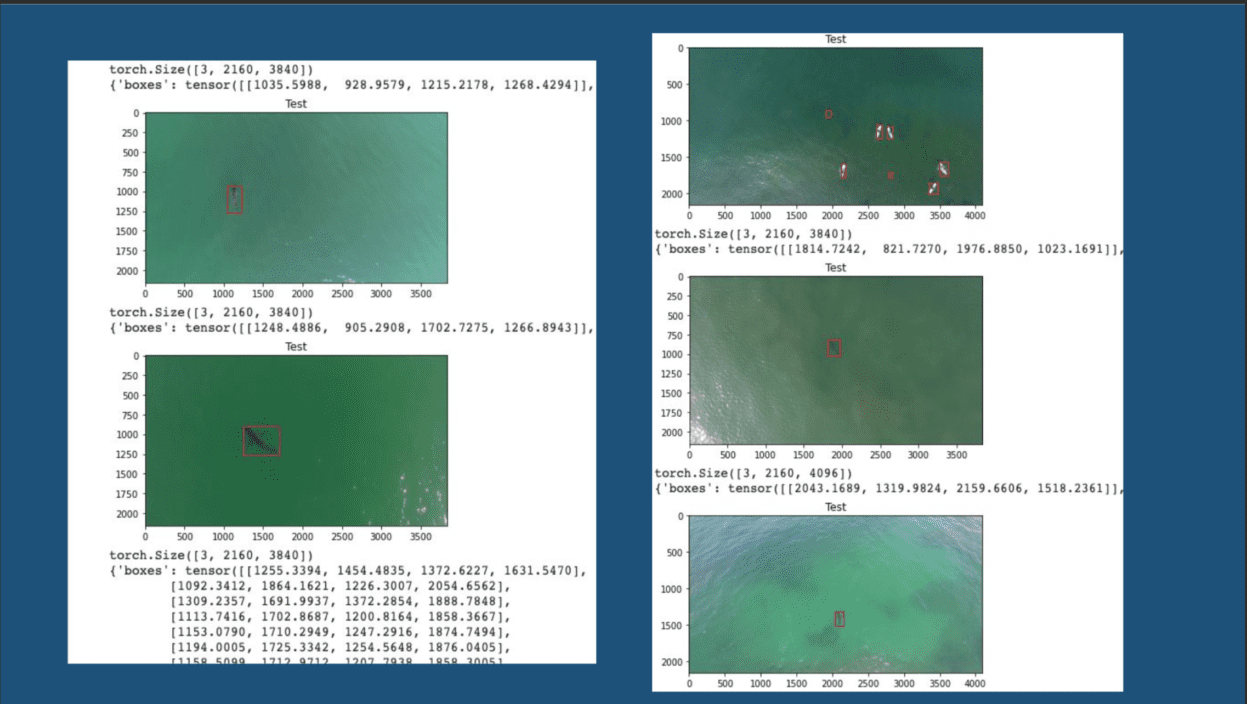

The object detection model was built with deep learning: the model is trained on labeled images until it can recognize and distinguish objects in the ocean. The most important objects for this project are sharks, surfers, and swimmers.

In total, the model is trained to recognize white sharks, leopard sharks, dolphins, boats, surfers, boogie boarders, waders, and swimmers. So far, it has been trained on 1,329 images and achieves a mean average precision (mAP) of 0.822 and an average recall of 0.801, computed at an Intersection over Union (IoU) threshold of 0.50 across all object classes.
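To make the IoU criterion concrete, the sketch below computes IoU for axis-aligned boxes and counts a prediction as a true positive when its IoU with a still-unmatched ground-truth box of the same class is at least 0.50. It is a simplified illustration, not the project's evaluation code: the box format, the greedy matching, and the function names are assumptions, and full mAP additionally averages precision over recall levels and over classes.

```python
from typing import List, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: List[Box], truths: List[Box],
                     threshold: float = 0.5) -> Tuple[float, float]:
    """Greedy one-to-one matching for a single class in a single image.

    `preds` is assumed to be sorted by confidence, highest first.
    """
    matched = set()
    true_positives = 0
    for p in preds:
        best_j, best_iou = None, threshold
        for j, t in enumerate(truths):
            if j in matched:
                continue  # each ground-truth box can be matched only once
            overlap = iou(p, t)
            if overlap >= best_iou:  # keep the best unmatched box with IoU >= threshold
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
            true_positives += 1
    precision = true_positives / len(preds) if preds else 0.0
    recall = true_positives / len(truths) if truths else 0.0
    return precision, recall
```

For example, a predicted box covering exactly half of a ground-truth box has an IoU of 0.5 and just passes the 0.50 threshold, while a box shifted halfway off its target (IoU of 1/3) does not.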

Real Time Shark Spotting

Using a drone equipped with a neural accelerator would allow the program to run in real time, spotting sharks and alerting lifeguards or beachgoers. Although this is being explored, it is not a main objective for this summer and is not currently feasible because of limitations including battery power and cost.

Metadata Extraction and Implementation

However accurately our model performs, a detection would not be useful in any practical setting if we did not know where the sighting occurred. For this reason, access to GPS location data at the moment of a shark sighting is very valuable. Other useful information includes how close the sharks are to people and how far offshore they are, which requires a way to extract ground distances from aerial footage. For this project, data will be collected with DJI Phantom 3 and 4 drones, which record the drone’s GPS location and altitude. Accessing this information programmatically during the flight, and using it to estimate the other quantities, is crucial for applying any findings in a real-world situation.
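One possible way to estimate these distances is sketched below under simplifying assumptions that have not been validated against the Phantom footage: the camera points straight down, the water is flat, the reported altitude is the height above the water, and the camera's horizontal field of view is known. Under those assumptions a frame covers a ground width of 2·h·tan(FOV/2), so pixel distances scale linearly to meters. The haversine formula then gives the distance between two GPS fixes, such as the drone's position and a point on the shoreline. All function names are hypothetical, and the numbers in the usage note are placeholders, not DJI specifications.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two GPS fixes given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))  # clamp guards rounding


def meters_per_pixel(altitude_m: float, horizontal_fov_deg: float, image_width_px: int) -> float:
    """Ground width of the frame divided by its pixel width.

    Assumes a straight-down camera over flat water, with altitude measured
    from the water surface.
    """
    ground_width_m = 2 * altitude_m * math.tan(math.radians(horizontal_fov_deg) / 2)
    return ground_width_m / image_width_px


def pixel_distance_m(p1: Tuple[float, float], p2: Tuple[float, float],
                     altitude_m: float, horizontal_fov_deg: float,
                     image_width_px: int) -> float:
    """Approximate ground distance in meters between two pixel positions in one frame."""
    return math.dist(p1, p2) * meters_per_pixel(altitude_m, horizontal_fov_deg, image_width_px)
```

With placeholder values of a 50 m altitude, a 90° field of view, and a 1000-pixel-wide frame, each pixel spans about 0.1 m, so a shark and a surfer 500 pixels apart would be roughly 50 m apart. A tilted camera or waves would break the flat-ground assumption and require a full camera model instead.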

System Design and Architecture

The most important request from CSULB Shark Lab researchers is for automatic object detection, categorization and labeling of sharks in drone footage. To enhance their experience and research efficiency, the user interface provides a summary page with all statistics from the video, including counts of animals, people, and other objects that appear in the footage.
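A minimal sketch of how such per-video statistics could be aggregated, assuming the detector returns a (label, confidence) pair for each object in each sampled frame. This format, the 0.5 score cutoff, and `summarize` are hypothetical. Because the same animal appears in many frames, summing detections overcounts individuals, so the sketch also reports the peak count seen in any single frame as a rough lower-bound estimate of how many distinct objects were present.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical detector output: (class label, confidence score) per detected object.
Detection = Tuple[str, float]


def summarize(frames: List[List[Detection]],
              min_score: float = 0.5) -> Dict[str, Dict[str, int]]:
    """Per-class counts across all frames of one video."""
    total = Counter()        # all detections, summed over frames
    peak = Counter()         # most instances of a class seen in any one frame
    frames_with = Counter()  # number of frames in which the class appears
    for detections in frames:
        # Ignore low-confidence detections before counting.
        per_frame = Counter(label for label, score in detections if score >= min_score)
        total.update(per_frame)
        for label, n in per_frame.items():
            peak[label] = max(peak[label], n)
            frames_with[label] += 1
    return {
        label: {
            "detections": total[label],
            "peak_in_one_frame": peak[label],
            "frames": frames_with[label],
        }
        for label in total
    }


video = [
    [("white_shark", 0.9), ("surfer", 0.8), ("surfer", 0.4)],
    [("white_shark", 0.95), ("surfer", 0.7), ("surfer", 0.6)],
    [],
]
print(summarize(video)["surfer"])
```

A summary page could render this dictionary as a table; tracking individual objects across frames would give better counts than the per-frame peak.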