Our Team

Wesley Khademi

Computer Science

Wesley is a fifth-year undergraduate student studying Computer Science with a minor in Mathematics. After graduation, he plans to pursue a PhD beginning in Fall 2021. Wesley’s general research interests are in Deep Learning and Computer Vision, with particular interest in scene understanding, generative models, and unsupervised learning.

Clara Brechtel

Biomedical Engineering

Clara is a fifth-year undergraduate student studying Biomedical Engineering, planning to graduate this December. Starting in Fall 2021, she plans to pursue a Master’s in Computer Science with a focus on biomedical data science. She is interested in working at the intersection of computer science and biology, where she can apply modern computing to advance scientific discovery and improve access to healthcare.

Dr. Jonathan Ventura

Computer Science & Software Engineering

Dr. Ventura is an assistant professor in the Department of Computer Science and Software Engineering at Cal Poly, San Luis Obispo. He earned his Ph.D. in Computer Science from the University of California, Santa Barbara in 2012. His research focus is computer vision: making computers “see.” He is especially interested in 3D computer vision, deep learning, and biomedical image analysis.

Acknowledgements

We would like to thank the Cal Poly College of Engineering, SURP coordinators, and the NIH for making this project possible, along with our advisor, Dr. Ventura, for proposing this project and advising us throughout the summer.

Learning to Denoise Low-Dose CT Scans

Problem Statement

Low-dose CT imaging procedures are being explored in medical practice to limit patient radiation exposure. However, CT images acquired at low dose contain significantly more noise than full-dose CT scans, which could lead to medical errors.

Introduction

Computerized Tomography (CT) scans are one of the most common medical imaging modalities. This technology combines many x-ray measurements to produce a volumetric reconstruction of internal organs and bones. CT imaging is a critical component of diagnosing, treating, and monitoring patients. With the increased use of CT technology comes greater patient exposure to harmful radiation. One method to mitigate this risk is a low-dose imaging protocol, which limits patient radiation exposure by decreasing the x-ray tube current and exposure time. This approach effectively reduces the patient's radiation dose, but it sacrifices image quality because fewer detected x-ray photons produce noisier measurements. Degraded CT image quality could have serious consequences for medical decisions. Therefore, we propose a self-supervised deep learning model that denoises raw CT projection data before reconstruction in order to recover a clean CT image.

Experiments

We evaluate our method on the LoDoPaB-CT dataset, which consists of pairs of noisy projection data of size 1000×513 and clean reconstructed CT images of size 362×362. The noisy (low-dose) projection data are simulated by transforming the clean CT images into the projection data domain and adding Poisson noise. The dataset contains 35,820 training pairs and 3,553 test pairs.
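LoDoPaB-CT already ships with its simulated low-dose observations, so this step is not needed to use the dataset. For intuition, the sketch below shows one common way to simulate low-dose projection data: noise-free line integrals are converted to expected photon counts with the Beer-Lambert law, Poisson noise is applied to the counts, and the result is mapped back with a logarithm. The function name `simulate_low_dose`, the photon count `photons_per_bin`, and the zero-count floor `min_count` are illustrative assumptions, not values stated in this poster or necessarily the dataset's exact procedure.

```python
from typing import Optional

import numpy as np


def simulate_low_dose(
    clean_sinogram: np.ndarray,
    photons_per_bin: float = 4096.0,
    min_count: float = 0.1,
    seed: Optional[int] = None,
) -> np.ndarray:
    """Hypothetical helper: Poisson noise on photon counts (Beer-Lambert model).

    clean_sinogram holds noise-free line integrals (attenuation along each ray).
    photons_per_bin and min_count are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    # Expected number of photons reaching each detector bin.
    expected_counts = photons_per_bin * np.exp(-clean_sinogram)
    # Photon detection is a counting process, so sample Poisson counts.
    counts = rng.poisson(expected_counts).astype(np.float64)
    # Floor zero counts so the logarithm below stays finite.
    counts = np.maximum(counts, min_count)
    # Convert back to (now noisy) line integrals.
    return -np.log(counts / photons_per_bin)
```

Lowering `photons_per_bin` mimics a lower dose: fewer photons per bin means a larger relative Poisson fluctuation and therefore noisier projections.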

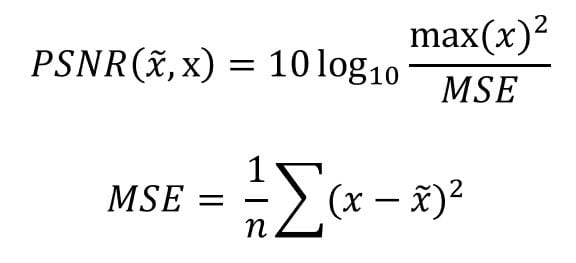

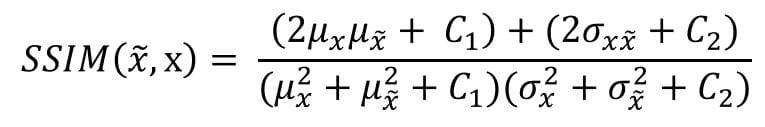

To evaluate our method, we use two metrics that measure the quality of the denoised image relative to the ground truth image. First, we use the peak signal-to-noise ratio (PSNR), a commonly used metric for evaluating how well an image has been denoised; higher PSNR indicates better denoising quality. We also use the structural similarity index measure (SSIM), which measures perceived image quality by comparing structural information (local luminance, contrast, and structure) between two images; higher SSIM indicates better perceived quality. All metrics are computed in the CT image domain.

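Peak Signal-to-Noise Ratio (PSNR):

$$\mathrm{PSNR}(x, \hat{x}) = 10 \log_{10} \frac{\mathrm{MAX}^2}{\frac{1}{n}\sum_{i=1}^{n} (x_i - \hat{x}_i)^2}$$

where $x$ is the ground truth image, $\hat{x}$ is the image being evaluated, and $\mathrm{MAX}$ is the dynamic range of the image.

Structural Similarity (SSIM):

$$\mathrm{SSIM}(x, \hat{x}) = \frac{(2\mu_x \mu_{\hat{x}} + c_1)(2\sigma_{x\hat{x}} + c_2)}{(\mu_x^2 + \mu_{\hat{x}}^2 + c_1)(\sigma_x^2 + \sigma_{\hat{x}}^2 + c_2)}$$

where $\mu$, $\sigma^2$, and $\sigma_{x\hat{x}}$ are local means, variances, and covariance computed over a sliding window, $c_1$ and $c_2$ are small stabilizing constants, and the resulting SSIM map is averaged over the image.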
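Both metrics can be computed with a short NumPy sketch such as the one below (NumPy 1.20+ is needed for `sliding_window_view`). It assumes the ground truth's dynamic range as MAX when none is given and uses a 7×7 uniform window for SSIM. Established implementations such as `skimage.metrics.peak_signal_noise_ratio` and `skimage.metrics.structural_similarity` differ in details (sample versus population covariance, border handling), so values may not match exactly, and this sketch is not necessarily the implementation used for the results reported on this poster.

```python
from typing import Optional

import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _dynamic_range(gt: np.ndarray, data_range: Optional[float]) -> float:
    # Assumption: default MAX is the dynamic range of the ground truth.
    return float(gt.max() - gt.min()) if data_range is None else float(data_range)


def psnr(ground_truth, estimate, data_range: Optional[float] = None) -> float:
    """Peak signal-to-noise ratio in dB between ground truth x and estimate x_hat."""
    gt = np.asarray(ground_truth, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((gt - est) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(_dynamic_range(gt, data_range) ** 2 / mse))


def ssim(ground_truth, estimate, data_range: Optional[float] = None,
         win: int = 7, k1: float = 0.01, k2: float = 0.03) -> float:
    """Mean SSIM over all win x win windows (uniform weights, no padding)."""
    gt = np.asarray(ground_truth, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    dr = _dynamic_range(gt, data_range)
    c1, c2 = (k1 * dr) ** 2, (k2 * dr) ** 2
    # Every win x win patch: shape (H - win + 1, W - win + 1, win, win).
    p = sliding_window_view(gt, (win, win))
    q = sliding_window_view(est, (win, win))
    axes = (-2, -1)
    mu_p, mu_q = p.mean(axis=axes), q.mean(axis=axes)
    var_p, var_q = p.var(axis=axes), q.var(axis=axes)
    cov = ((p - mu_p[..., None, None]) * (q - mu_q[..., None, None])).mean(axis=axes)
    ssim_map = ((2 * mu_p * mu_q + c1) * (2 * cov + c2)) / (
        (mu_p ** 2 + mu_q ** 2 + c1) * (var_p + var_q + c2)
    )
    return float(ssim_map.mean())
```

Fixing `data_range` explicitly (rather than deriving it per image) keeps scores comparable across test images with different intensity ranges.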

Methods

Self-Supervised Denoising

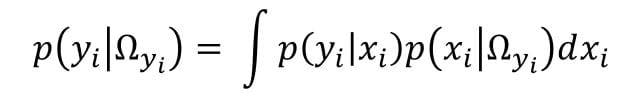

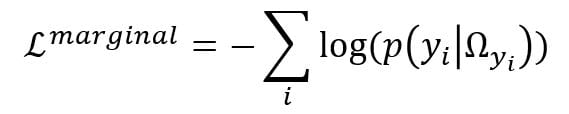

The goal of denoising is to predict the values of a “clean” image $x = (x_1, \ldots, x_n)$ given a “noisy” image $y = (y_1, \ldots, y_n)$. Let $\Omega_{y_i}$ be the neighborhood around pixel $y_i$, excluding $y_i$ itself. Assuming the noise is independent across pixels and that each clean pixel depends on its neighborhood, we can train a blindspot neural network [1] to output a prior belief about the clean pixel, $p(x_i \mid \Omega_{y_i})$. Since only the noisy observations $y_i$ are available during training, we connect $y_i$ to $\Omega_{y_i}$ by marginalizing out the clean value $x_i$:

$$p(y_i \mid \Omega_{y_i}) = \int p(y_i \mid x_i)\, p(x_i \mid \Omega_{y_i})\, dx_i$$

where $p(y_i \mid x_i)$ is our noise model and $p(x_i \mid \Omega_{y_i})$ is our prior. We then train the model by minimizing the negative log-likelihood over all pixels:

$$\mathcal{L} = -\sum_{i} \log p(y_i \mid \Omega_{y_i})$$

Once trained, the network provides an estimate of the clean value $x_i$ given its neighborhood $\Omega_{y_i}$.
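To make the blind-spot idea concrete, the NumPy sketch below predicts every pixel from $\Omega_{y_i}$ only, here simply the mean of the surrounding 3×3 neighbors with the center excluded. `blindspot_neighbor_mean` is a hypothetical, illustrative stand-in: the network of [1] learns this mapping with a receptive field that excludes the center pixel rather than averaging. The property the two share is that the output at pixel $i$ does not depend on $y_i$.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def blindspot_neighbor_mean(noisy: np.ndarray, radius: int = 1) -> np.ndarray:
    """Predict each pixel from its neighborhood Omega_{y_i}, excluding y_i.

    Hypothetical stand-in for a blind-spot network: a plain average of the
    (2*radius+1)**2 - 1 surrounding pixels, not a learned model.
    """
    k = 2 * radius + 1
    # Reflect-pad so border pixels also get a full neighborhood.
    padded = np.pad(noisy.astype(np.float64), radius, mode="reflect")
    # windows[r, c] is the k x k patch centered on original pixel (r, c).
    windows = sliding_window_view(padded, (k, k))
    # Boolean mask that drops the center pixel y_i from every patch.
    mask = np.ones((k, k), dtype=bool)
    mask[radius, radius] = False
    return windows[..., mask].mean(axis=-1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    y = rng.normal(size=(8, 8))
    y_changed = y.copy()
    y_changed[4, 4] += 100.0  # perturb only the center pixel
    same = blindspot_neighbor_mean(y)[4, 4] == blindspot_neighbor_mean(y_changed)[4, 4]
    print("prediction at (4, 4) unchanged:", same)
```

Because the prediction never sees $y_i$, the model cannot learn the trivial identity mapping, which is what makes training on noisy data alone possible.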

Noise Model & Prior

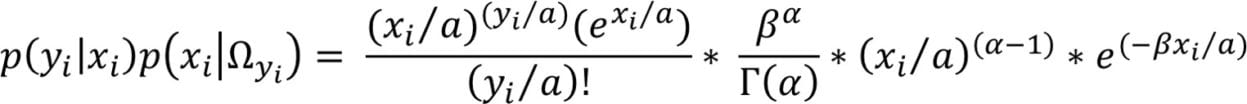

We model $p(y_i \mid x_i)$ as Poisson, $y_i \sim a\,\mathcal{P}(x_i / a)$, where $a$ is a scale factor, and our prior as a Gamma distribution, $\Gamma(\alpha, \beta)$, which is the conjugate prior for the Poisson distribution. Then we have:

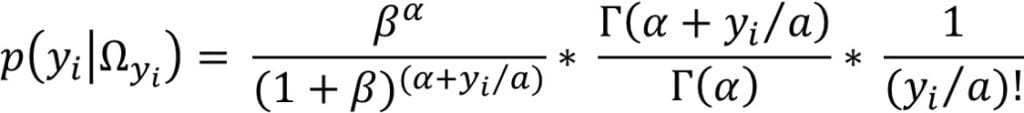

Integrating over $x_i$ gives:

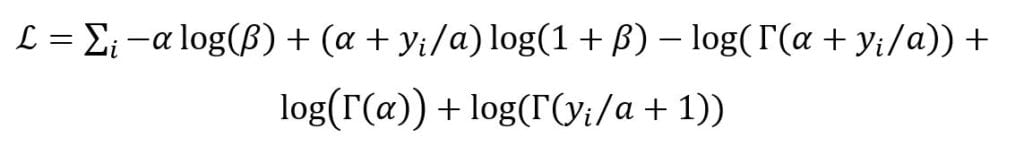

The loss we minimize then becomes:

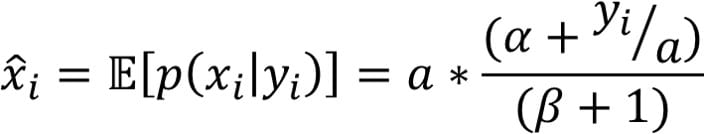
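The exact closed form depends on how $\Gamma(\alpha, \beta)$ is parameterized. Assuming shape $\alpha$ and rate $\beta$ for the prior on $x_i$, the Poisson-Gamma marginal of the count $k_i = y_i / a$ is a negative binomial distribution, and the per-pixel loss can be sketched in plain Python as below. The function names are hypothetical, and we assume the blind-spot network outputs one $(\alpha_i, \beta_i)$ pair per pixel; a training implementation would use a vectorized log-gamma such as `torch.lgamma` instead of `math.lgamma`.

```python
import math
from typing import Sequence


def poisson_gamma_nll(y: float, alpha: float, beta: float, a: float = 1.0) -> float:
    """-log p(y | Omega) for one pixel (hypothetical helper).

    Assumes y ~ a * Poisson(x / a) and x ~ Gamma(shape=alpha, rate=beta), so
    k = y / a is a non-negative integer count whose marginal is negative binomial.
    """
    k = y / a
    rate = a * beta  # rate of the induced Gamma prior on lambda = x / a
    log_p = (
        math.lgamma(k + alpha)
        - math.lgamma(alpha)
        - math.lgamma(k + 1.0)
        + alpha * math.log(rate)
        - (k + alpha) * math.log1p(rate)  # log(1 + rate)
    )
    return -log_p


def mean_loss(ys: Sequence[float], alphas: Sequence[float],
              betas: Sequence[float], a: float = 1.0) -> float:
    """Average loss over pixels; alphas/betas stand in for network outputs."""
    terms = [poisson_gamma_nll(y, al, be, a) for y, al, be in zip(ys, alphas, betas)]
    return sum(terms) / len(terms)
```

Working in log space with `lgamma` avoids overflow in the factorial and Gamma-function terms for large counts.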

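Because the blindspot network sees only $\Omega_{y_i}$, at test time we incorporate the information in $y_i$ itself by computing the posterior mean estimate:

$$\hat{x}_i = \mathbb{E}[x_i \mid y_i, \Omega_{y_i}] = \frac{\int x_i\, p(y_i \mid x_i)\, p(x_i \mid \Omega_{y_i})\, dx_i}{\int p(y_i \mid x_i)\, p(x_i \mid \Omega_{y_i})\, dx_i}$$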

The expected value of the posterior, $\hat{x}_i$, is used as our clean estimate of $x_i$.
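Under the same assumptions as a shape-rate Gamma prior with parameters $\alpha_i, \beta_i$ predicted from $\Omega_{y_i}$, conjugacy gives the posterior $\Gamma(\alpha_i + y_i/a,\ \beta_i + 1/a)$, so the posterior mean reduces to a one-line computation. The function name `posterior_mean` is hypothetical.

```python
def posterior_mean(y: float, alpha: float, beta: float, a: float = 1.0) -> float:
    """E[x | y, Omega] for y ~ a*Poisson(x/a), x ~ Gamma(alpha, rate=beta).

    Posterior is Gamma(alpha + y/a, rate=beta + 1/a); its mean
    (alpha + y/a) / (beta + 1/a) simplifies to (a*alpha + y) / (a*beta + 1).
    """
    return (a * alpha + y) / (a * beta + 1.0)
```

The estimate is a weighted average of the prior mean $\alpha_i/\beta_i$ and the observation $y_i$: with $w_i = a\beta_i/(a\beta_i + 1)$, $\hat{x}_i = w_i\,\alpha_i/\beta_i + (1 - w_i)\,y_i$. A confident prior (large $\beta_i$ relative to $1/a$) pulls the estimate toward the network's prediction, while a weak prior leaves it close to the noisy measurement.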

Results

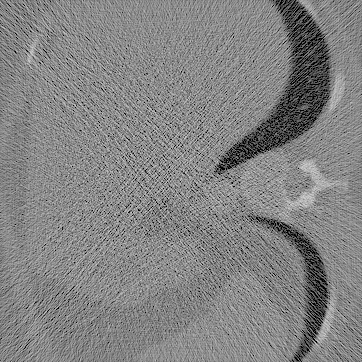

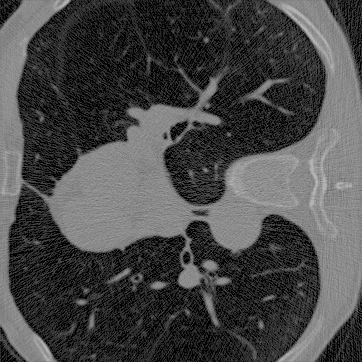

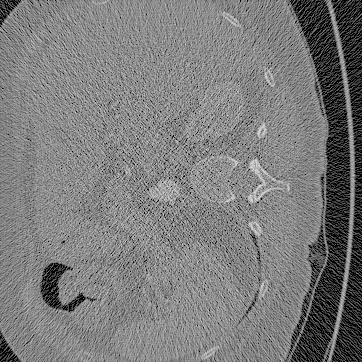

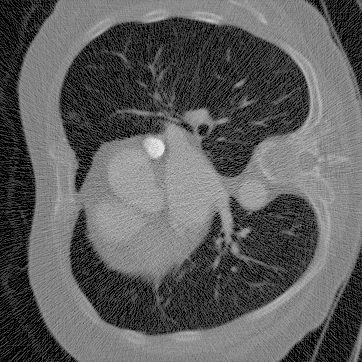

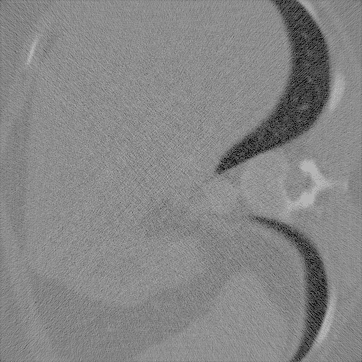

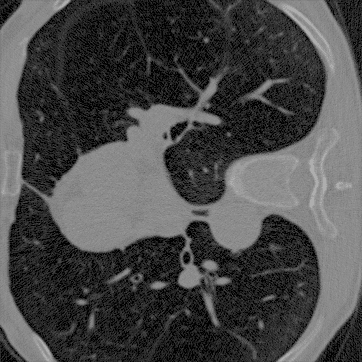

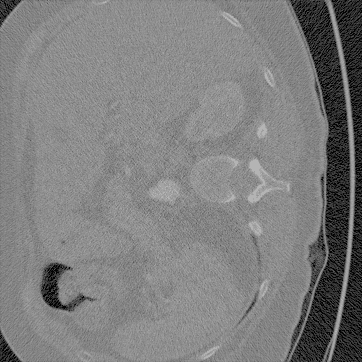

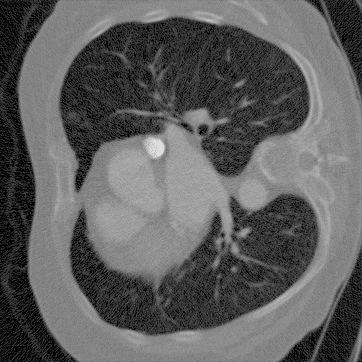

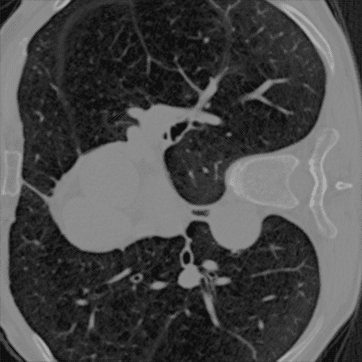

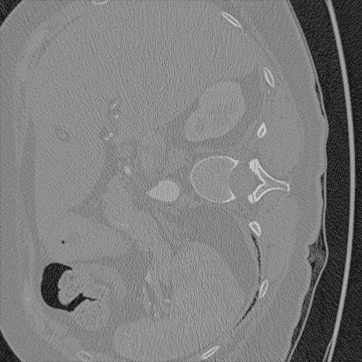

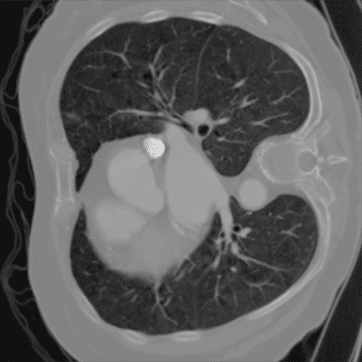

The noisy and denoised images shown here were reconstructed by applying a standard filtered back-projection (FBP) algorithm to the noisy and denoised projection data, respectively. The ground truth reconstructions are provided by the LoDoPaB-CT dataset. We evaluated five randomly sampled image pairs from the test set by computing PSNR and SSIM against the ground truth.

Figure: test samples 1 to 5, each shown as Noisy, Denoised, and Ground Truth reconstructions.

This work was supported in part by the National Institutes of Health under award number 1R15GM128166-01.

Results

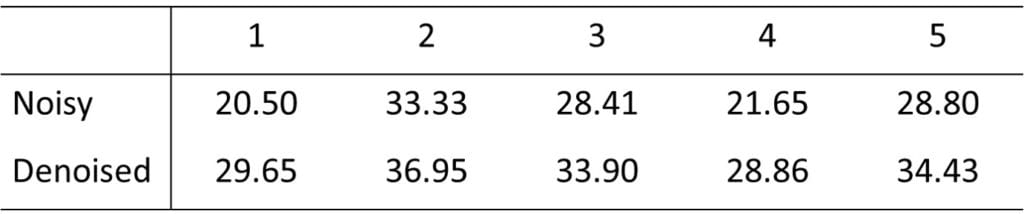

PSNR:

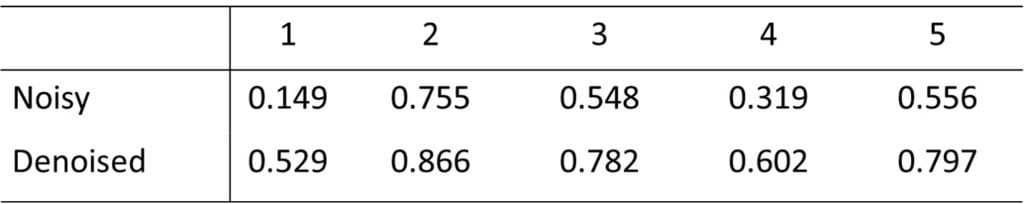

SSIM:

The results show that denoising increases both the PSNR and the SSIM of the reconstructed images. The average PSNR is 26.54 ± 4.80 dB and 32.76 ± 3.05 dB for the noisy and denoised reconstructions, respectively. The average SSIM of the noisy and denoised reconstructions is 0.465 ± 0.210 and 0.715 ± 0.128, respectively, where an SSIM of 1 corresponds to a perfect match with the ground truth. To confirm the effectiveness of denoising, we need to evaluate a larger sample of reconstructed images. Nevertheless, both the metrics and the visual appearance of the results indicate that the denoised images contain less noise than the original noisy images.

Future Directions

Because our method operates in the projection data domain, small inaccuracies in denoising can produce large artifacts once the data are reconstructed into the CT image domain. For this reason, most self-supervised methods perform denoising in the CT image domain instead; however, this breaks the assumption of independently distributed noise, because the reconstruction algorithm typically makes the noise correlated across pixels. Future work lies in extending our model to handle structured or correlated noise, so that our method can denoise directly in the CT image domain rather than in the projection data domain.
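The point that reconstruction correlates the noise can be illustrated with a toy NumPy experiment. Filtered back-projection is a linear operator; as a simpler stand-in (an assumption made only for illustration, not FBP itself), the sketch applies a 3×3 moving average to independent Gaussian noise and measures the correlation between horizontally adjacent pixels. Both function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def neighbor_correlation(img: np.ndarray) -> float:
    """Pearson correlation between horizontally adjacent pixels."""
    return float(np.corrcoef(img[:, :-1].ravel(), img[:, 1:].ravel())[0, 1])


def box_filter(img: np.ndarray, size: int = 3) -> np.ndarray:
    """Stand-in linear operator (NOT filtered back-projection): local mean."""
    return sliding_window_view(img, (size, size)).mean(axis=(-2, -1))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    iid_noise = rng.normal(size=(256, 256))  # independent per-pixel noise
    mixed_noise = box_filter(iid_noise)      # noise after a linear operator
    print("independent:", neighbor_correlation(iid_noise))
    print("after 3x3 average:", neighbor_correlation(mixed_noise))
```

For the independent noise the neighbor correlation is close to 0, while after the 3×3 average two horizontal neighbors share 6 of their 9 input samples, so their correlation is close to 6/9 ≈ 0.67. This shared dependence is what violates the blind-spot assumption that $\Omega_{y_i}$ carries no information about the noise in $y_i$.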

References

- [1] Samuli Laine, Tero Karras, Jaakko Lehtinen, and Timo Aila. High-quality self-supervised deep image denoising. In Advances in Neural Information Processing Systems, pages 6968-6978, 2019.