About Me

John Waidhofer

Computer Science

From graphics to machine learning, my projects focus on interactive visual experiences. As a Blended Master’s Student at Cal Poly SLO, I’m interested in computer vision research because of its potential in automation and creative work. I also love graphics and enjoy coding visual effects simulations in my free time. I built the evaluation pipeline for PanoSynthVR to help Professor Ventura and his colleagues validate their work as they build a system that effectively creates VR worlds from a single panoramic image. In the future, I would like to continue iterating on panorama synthesis and make this tool robust enough for consumer use.

Acknowledgements

Thanks to the Cal Poly Office of Student Research for their financial support, which made this project possible. Thank you, Professor Ventura, for your insightful advice, open collaboration, and inspiration throughout the summer.

Experience PanoSynthVR

PanoSynthVR is a machine learning model for panoramic view synthesis that constructs 3D VR environments from a single panoramic image. With it, creators can use consumer-grade cameras to immerse viewers in scenes that would otherwise require far more expensive hardware and much more time to produce. The evaluation pipeline that I worked on this summer generated the input panoramas used to produce scenes similar to the content seen in the video.

Panoramic Synthesis Evaluation Poster

Abstract

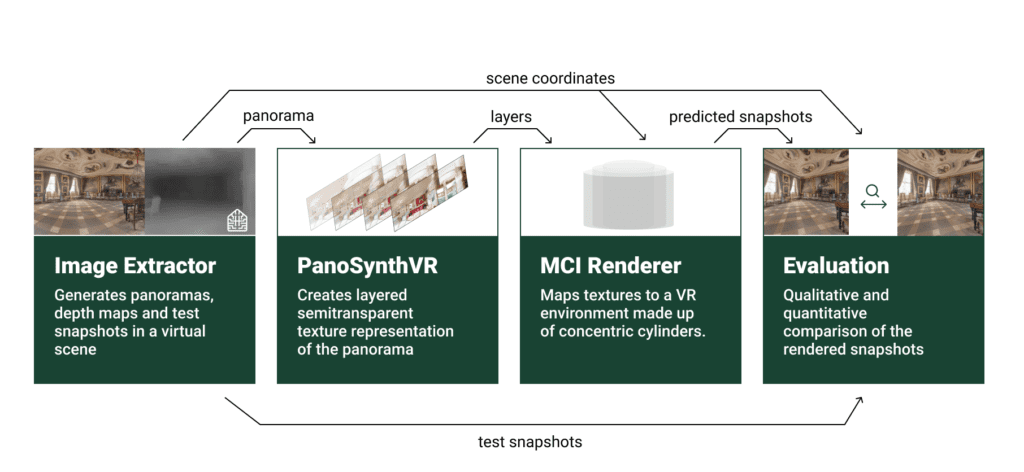

We developed a data generation and evaluation pipeline for PanoSynthVR, a machine learning approach to panoramic view synthesis. The PanoSynthVR model generates immersive VR scenes from a single panoramic image, significantly lowering the barrier to VR content creation. Our work uses Habitat Sim by Facebook Research to further analyze the robustness of PanoSynthVR through synthetic data extracted from 3D environments. We compare the disparity maps and panoramic images generated in Habitat Sim to the output of a multi-cylinder image (MCI) renderer, which uses the textures produced by PanoSynthVR to emulate an environment. This system provides an accurate, rapid, and extensible framework for evaluating and improving panoramic computer vision models.

Pipeline

The evaluation pipeline uses Habitat Sim to generate the initial panorama and the true snapshots. Given the initial panorama and a pose (translation + rotation), PanoSynthVR produces a predicted snapshot by rendering a multi-cylinder image (MCI) representation of the scene. Comparing the true and predicted snapshots lets us evaluate PanoSynthVR.
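The comparison loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the project's actual code: `Pose`, `evaluate`, `mean_abs_error`, and the two renderer callables are hypothetical names, images are simplified to flat lists of pixel intensities, and the units (metres, radians) are assumed.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Sequence, Tuple

# Stand-in for a real image type: a flat sequence of pixel intensities.
Image = Sequence[float]


@dataclass(frozen=True)
class Pose:
    """Hypothetical camera pose relative to the panorama center."""
    translation: Tuple[float, float, float]  # assumed to be metres
    rotation: Tuple[float, float, float]     # assumed yaw, pitch, roll in radians


def evaluate(
    poses: Iterable[Pose],
    render_true: Callable[[Pose], Image],       # e.g. a simulator snapshot
    render_predicted: Callable[[Pose], Image],  # e.g. an MCI render
    metric: Callable[[Image, Image], float],
) -> float:
    """Average an image metric over true/predicted snapshot pairs."""
    scores = [metric(render_true(p), render_predicted(p)) for p in poses]
    if not scores:
        raise ValueError("at least one pose is required")
    return sum(scores) / len(scores)


def mean_abs_error(a: Image, b: Image) -> float:
    """Toy metric used only to exercise the loop."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)
```

Passing the renderers and the metric in as callables keeps the loop independent of any particular simulator or model, so a different metric or scene source can be swapped in without touching the loop itself.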

Evaluation

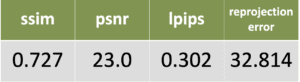

Four hundred PanoSynthVR renders were compared to the corresponding Habitat Sim renders using four image quality metrics. Half of the snapshots had a uniformly distributed offset between -10 cm and 10 cm, while the other half had an offset with a magnitude of 50 cm. The former set is within the expected MCI rendering distance while the latter pushes the bounds of the renderer. The following metrics compare the images on pixel-based and human perception accuracy:

- Structural Similarity Index (SSIM)

- Peak Signal-to-Noise Ratio (PSNR)

- Learned Perceptual Image Patch Similarity (LPIPS)

- Reprojection Error
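As a rough illustration of the setup above, the sketch below samples the two kinds of camera offsets and computes PSNR, the simplest of the listed metrics. Several details are assumptions rather than facts from the project: the small offsets are drawn independently per axis, the 50 cm offsets point in a uniformly random direction, images are flat lists of 8-bit intensities, and all function names are hypothetical. SSIM and LPIPS are omitted because they are normally taken from dedicated libraries.

```python
import math
import random
from typing import Sequence, Tuple


def sample_small_offset(rng: random.Random, limit_m: float = 0.10) -> Tuple[float, ...]:
    """Assumption: each axis is uniform in [-limit_m, limit_m], independently."""
    return tuple(rng.uniform(-limit_m, limit_m) for _ in range(3))


def sample_fixed_magnitude_offset(rng: random.Random, magnitude_m: float = 0.50) -> Tuple[float, ...]:
    """Assumption: a uniformly random direction scaled to a fixed length."""
    while True:
        # A normalized Gaussian vector is uniformly distributed on the sphere.
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 1e-12:
            return tuple(magnitude_m * c / norm for c in v)


def psnr(reference: Sequence[float], test: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in decibels; higher means more similar."""
    if len(reference) != len(test) or len(reference) == 0:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

PSNR measures only per-pixel error, which is why it is usually reported alongside structural and perceptual metrics such as SSIM and LPIPS.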

The table below shows the average value of each metric:

References

Richa Gadgil, Reesa John, Stefanie Zollmann, and Jonathan Ventura. 2021. PanoSynthVR: View Synthesis From A Single Input Panorama with Multi-Cylinder Images. In ACM SIGGRAPH 2021 Posters (SIGGRAPH ’21).

Andrew Szot et al. 2021. Habitat 2.0: Training Home Assistants to Rearrange their Habitat. arXiv:2106.14405.

Image Extraction

To make rapid training and testing feasible, we created a virtual panorama extractor by extending Habitat Sim. Our module creates a virtual agent that extracts cylindrical panoramas and square snapshots from a dataset of virtual environments. Not only does this provide precise disparity estimation, but it also allows researchers to use other 3D environment datasets.
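To give a sense of the geometry behind a cylindrical panorama, the sketch below maps a panorama pixel to a viewing ray and converts depth to disparity. It is not the extractor's actual code: the function names are hypothetical, the coordinate convention (y up, -z forward) and the pixel-center sampling are assumptions, and disparity is treated as inverse depth, which is a common convention but is not confirmed here.

```python
import math
from typing import Tuple


def cylinder_pixel_to_ray(
    u: float, v: float, width: int, height: int, vertical_fov_rad: float
) -> Tuple[float, float, float]:
    """Map pixel (u, v) of a 360-degree cylindrical panorama to a unit ray.

    Columns map linearly to azimuth; rows map linearly to height on a
    unit-radius cylinder, which is what distinguishes a cylindrical
    projection from an equirectangular one.
    """
    theta = 2.0 * math.pi * (u + 0.5) / width - math.pi   # azimuth in [-pi, pi)
    half_height = math.tan(vertical_fov_rad / 2.0)         # cylinder half-height
    y = half_height * (1.0 - 2.0 * (v + 0.5) / height)     # top row -> positive y
    x, z = math.sin(theta), -math.cos(theta)               # assumed: -z is forward
    norm = math.sqrt(x * x + y * y + z * z)
    return (x / norm, y / norm, z / norm)


def depth_to_disparity(depth_m: float) -> float:
    """Assumption: disparity is inverse depth (1 / metres)."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return 1.0 / depth_m
```

Because a simulator knows the exact depth at every pixel, disparity obtained this way is exact rather than estimated, which matches the precision benefit noted above.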

WebGL Renderer

The PanoSynthVR in-browser renderer allows users to enter panoramic scenes via a VR headset or a flatscreen device. We used mesh instancing to improve drawing performance and reduce the memory footprint to one tenth of the previous renderer's. As a result, the renderer runs significantly better on low-power devices.

Datasets

We sampled from the following scene libraries to generate the evaluation datasets:

- Replica

- Matterport Habitat

- Gibson

- Habitat Test Scenes